|

I am currently working on something incredibly exciting. I received my Bachelor's degree in 2024 with highest-level honors from the Turing Class at the School of EECS, Peking University, co-advised by Prof. Hao Dong and Prof. Yaodong Yang. I also have a twin brother, Haoran Geng, and we are frequently mistaken for one another🤣. Email / Google Scholar / GitHub / Twitter |

|

|

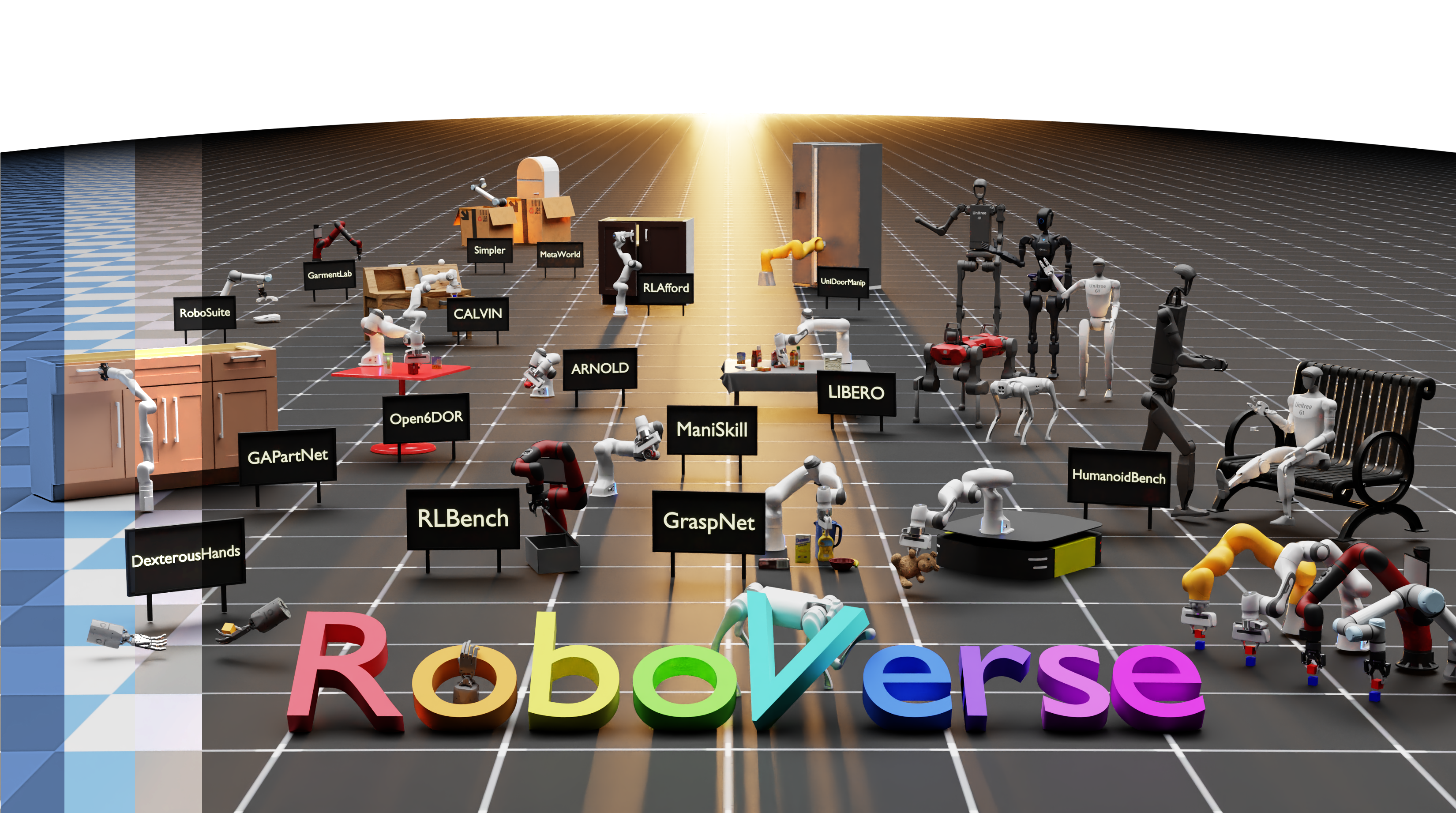

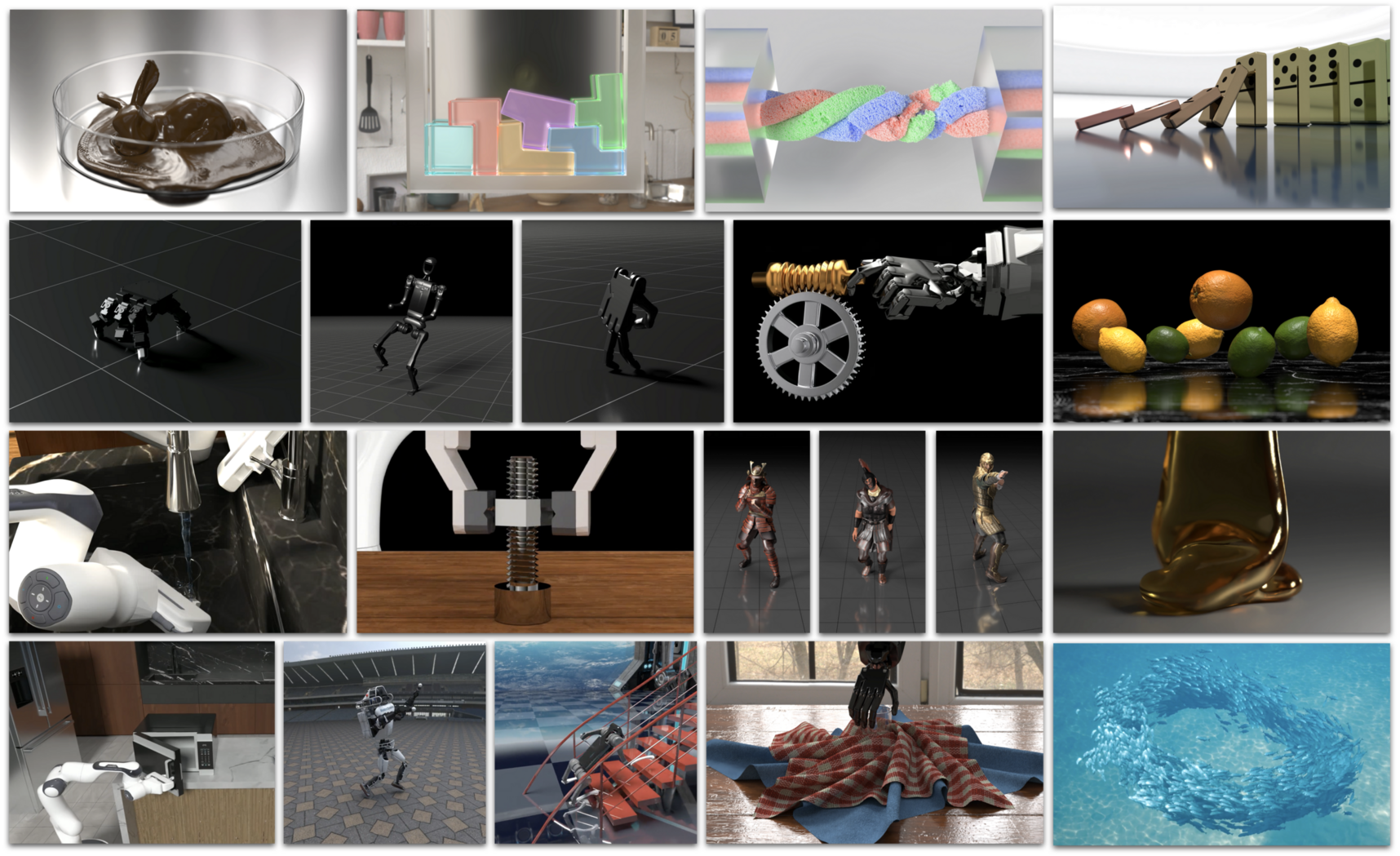

Paper / Project / Code / Bibtex RSS 2025 RoboVerse is a comprehensive framework for advancing robotics through a simulation platform, synthetic dataset, and unified benchmarks. |

|

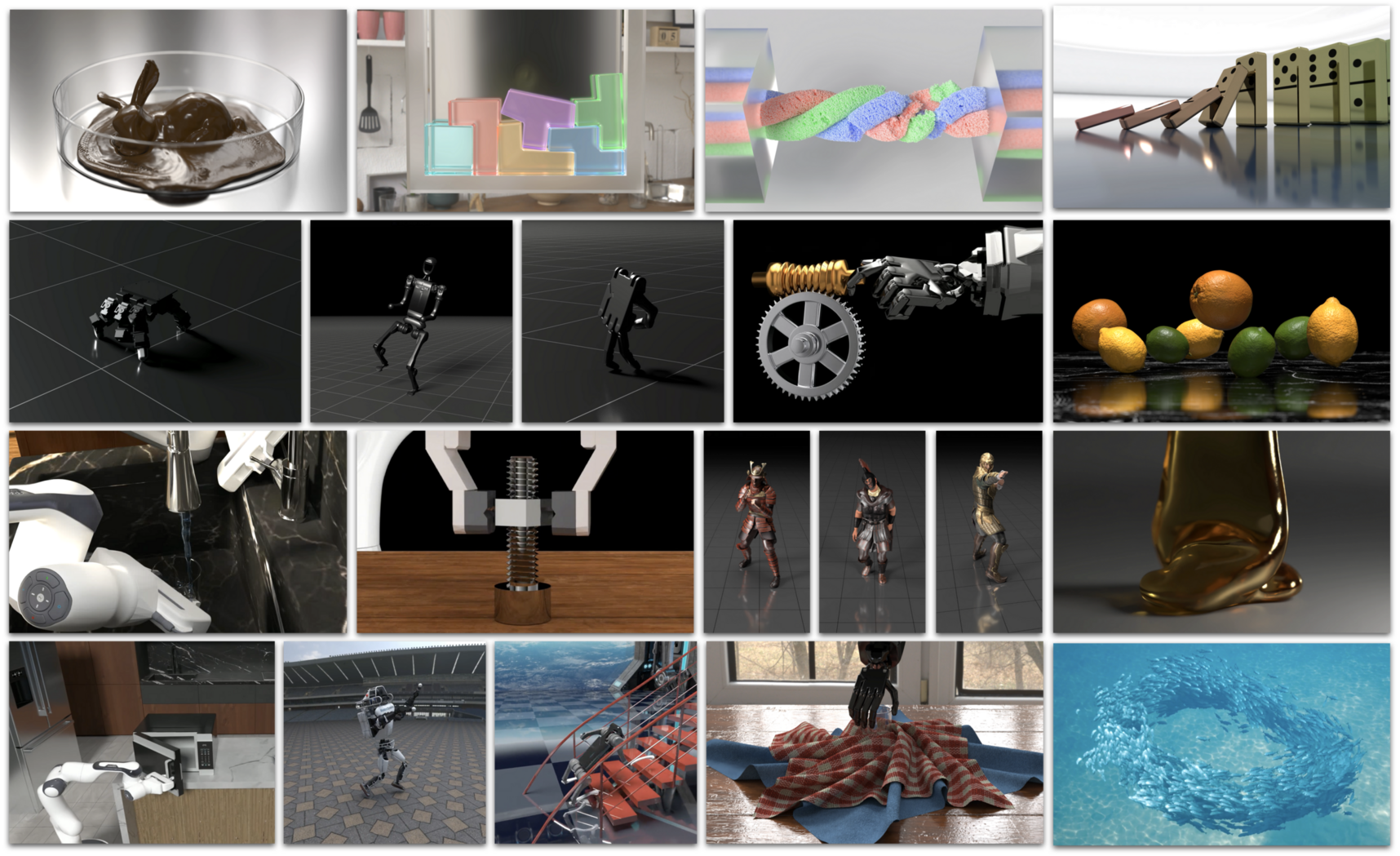

Code / Paper (Coming Soon) / Project Page Release Now! Genesis is a physics platform designed for general-purpose Robotics/Embodied AI/Physical AI applications. |

|

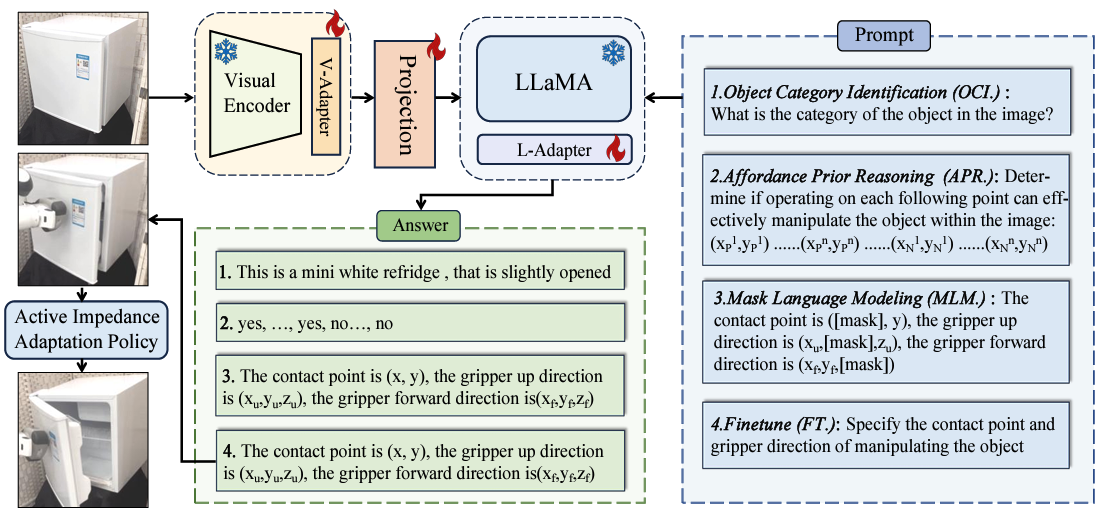

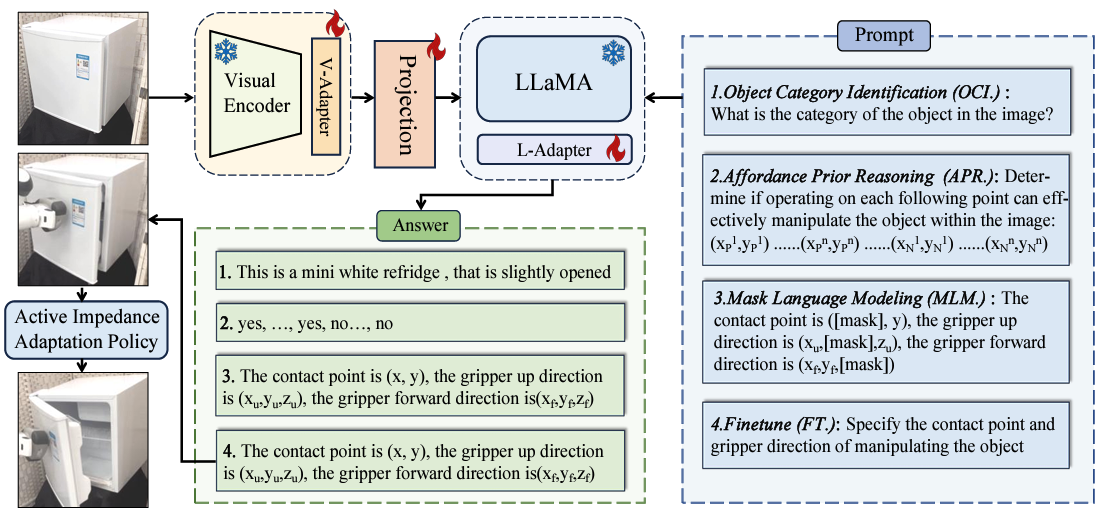

Website / arXiv / Code / 量子位 CVPR 2024 We introduce an innovative approach that leverages the robust reasoning capabilities of Multimodal Large Language Models (MLLMs). |

|

Website / arXiv / Code / DataSet IROS 2024 Oral Presentation RA-L 2024 We propose MultiGrasp, a two-stage framework for simultaneous multi-object grasping with multi-finger dexterous hands. |

|

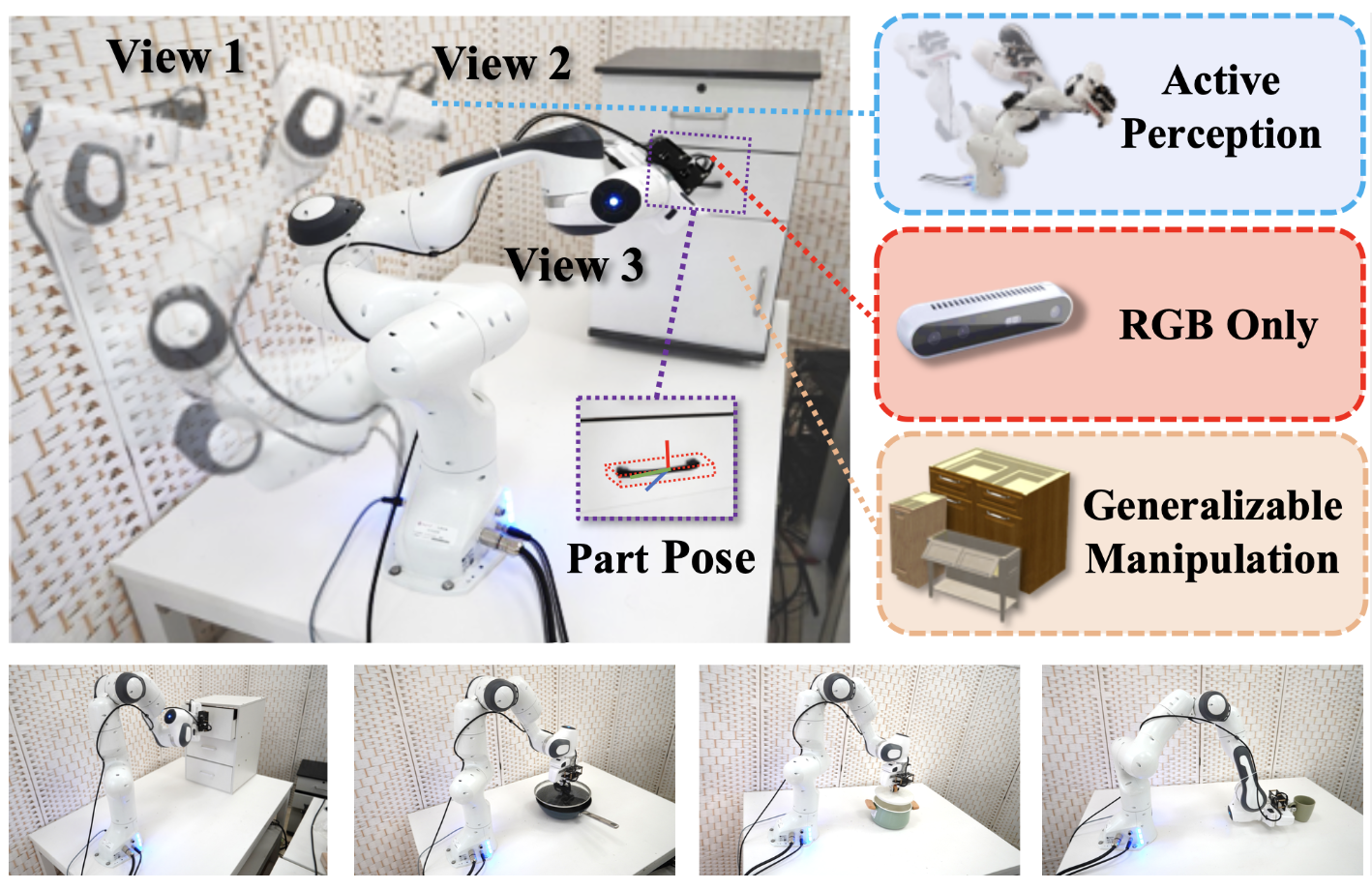

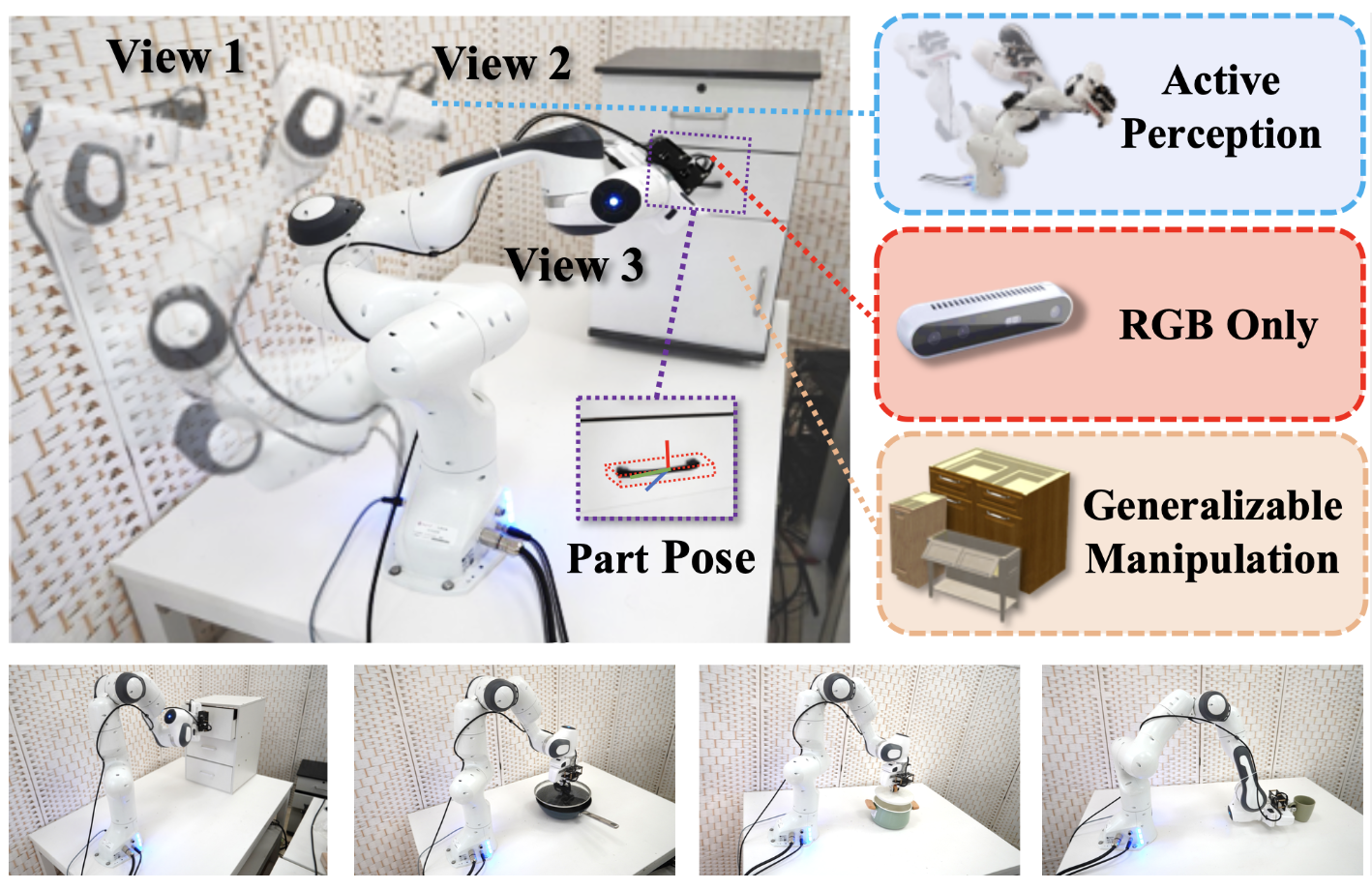

Website / arXiv / Code / Media (CFCS) ICRA 2024 Oral Presentation We propose an image-only robotic manipulation framework with the ability to actively perceive objects from multiple perspectives during the manipulation process. |

|

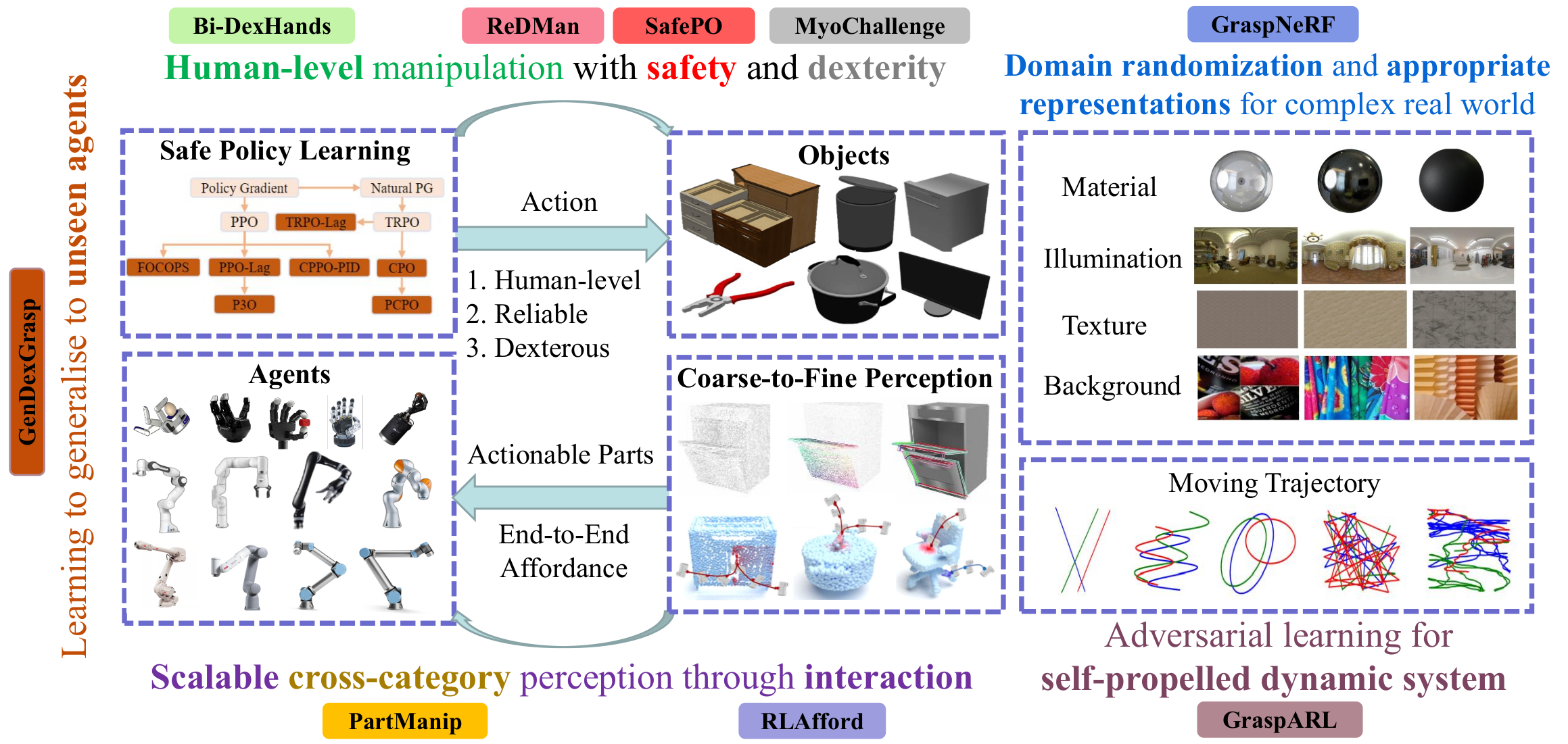

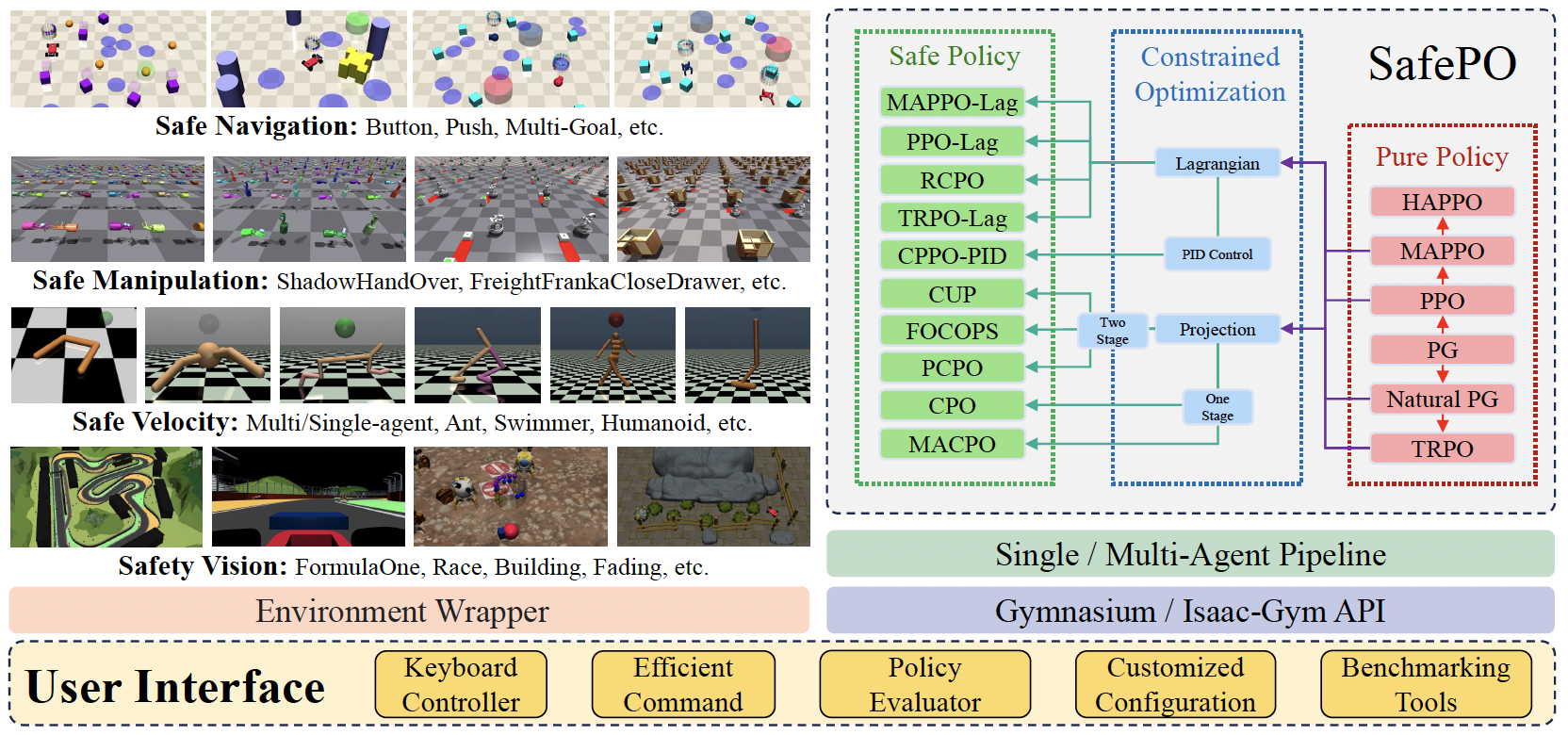

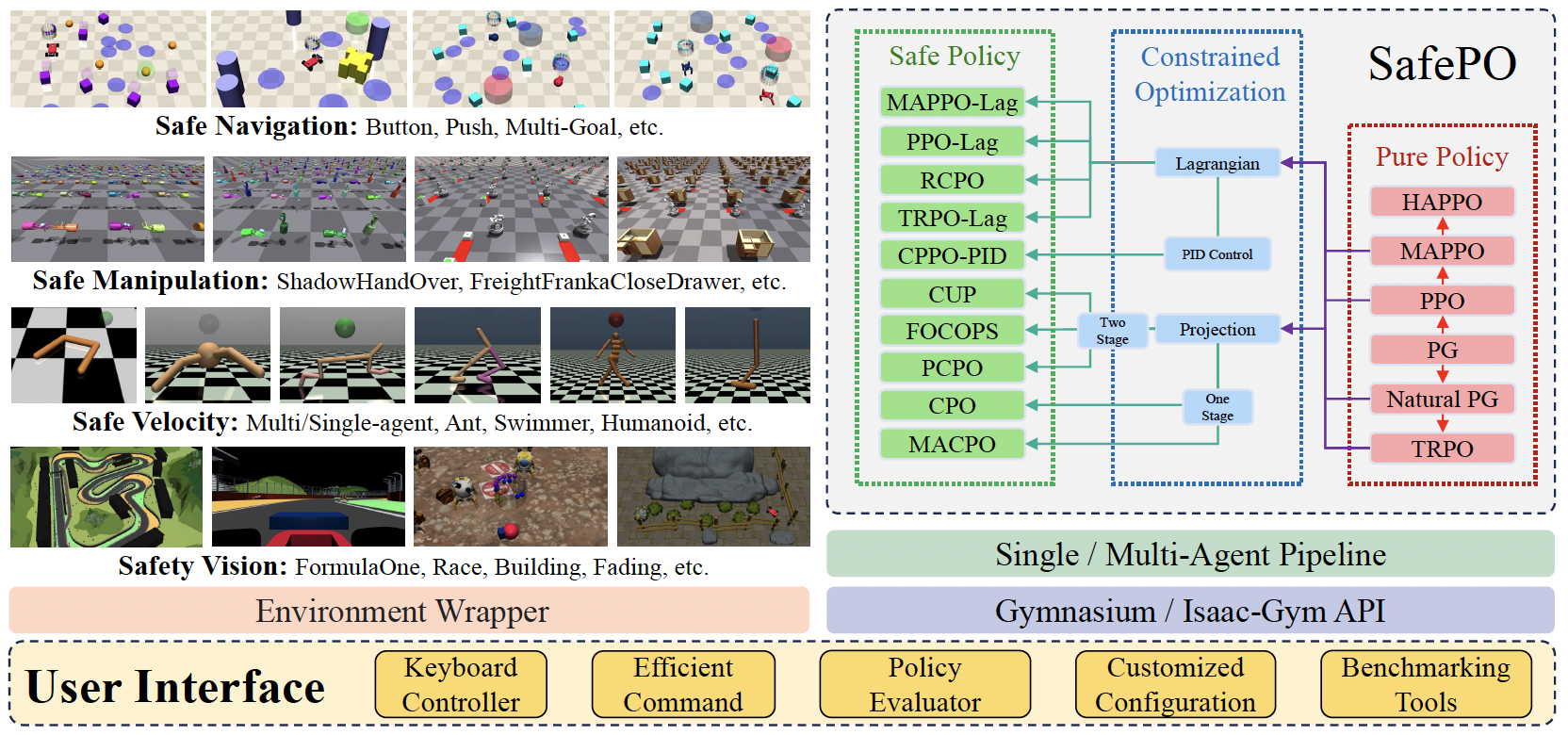

Website / Code (Safety Gymnasium) / Code (SafePO) / Documentation (Safety Gymnasium) / Documentation (SafePO) NeurIPS 2023 We present an environment suite called Safety-Gymnasium, which encompasses safety-critical tasks in both single- and multi-agent scenarios, and a library of algorithms named Safe Policy Optimization (SafePO). |

|

Paper (Long Version) / Paper (Short Version) / Project Page / Code T-PAMI 2023 NeurIPS 2022 We propose a bimanual dexterous manipulation benchmark, designed according to the cognitive science literature, for comprehensive reinforcement learning research. |

|

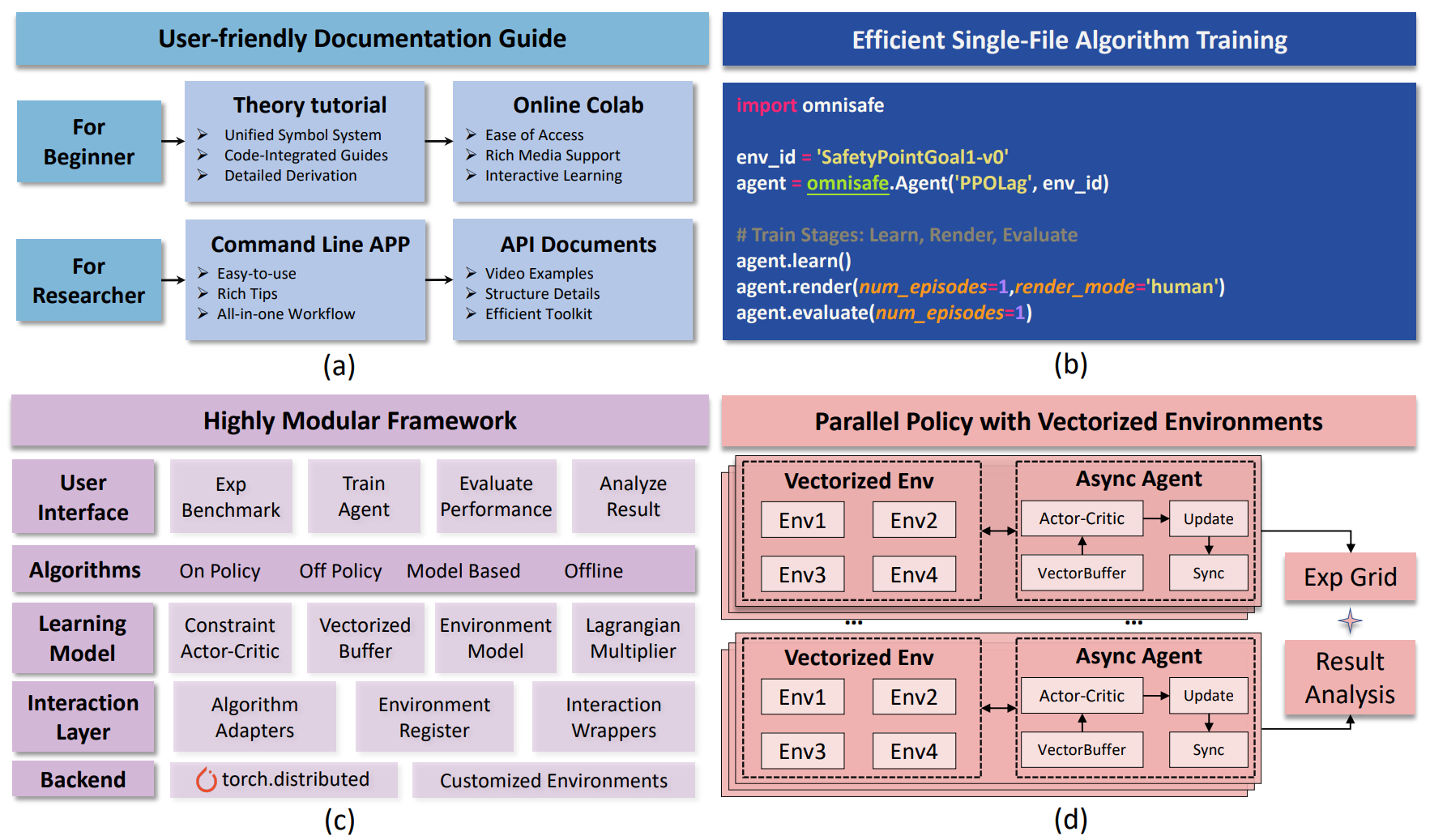

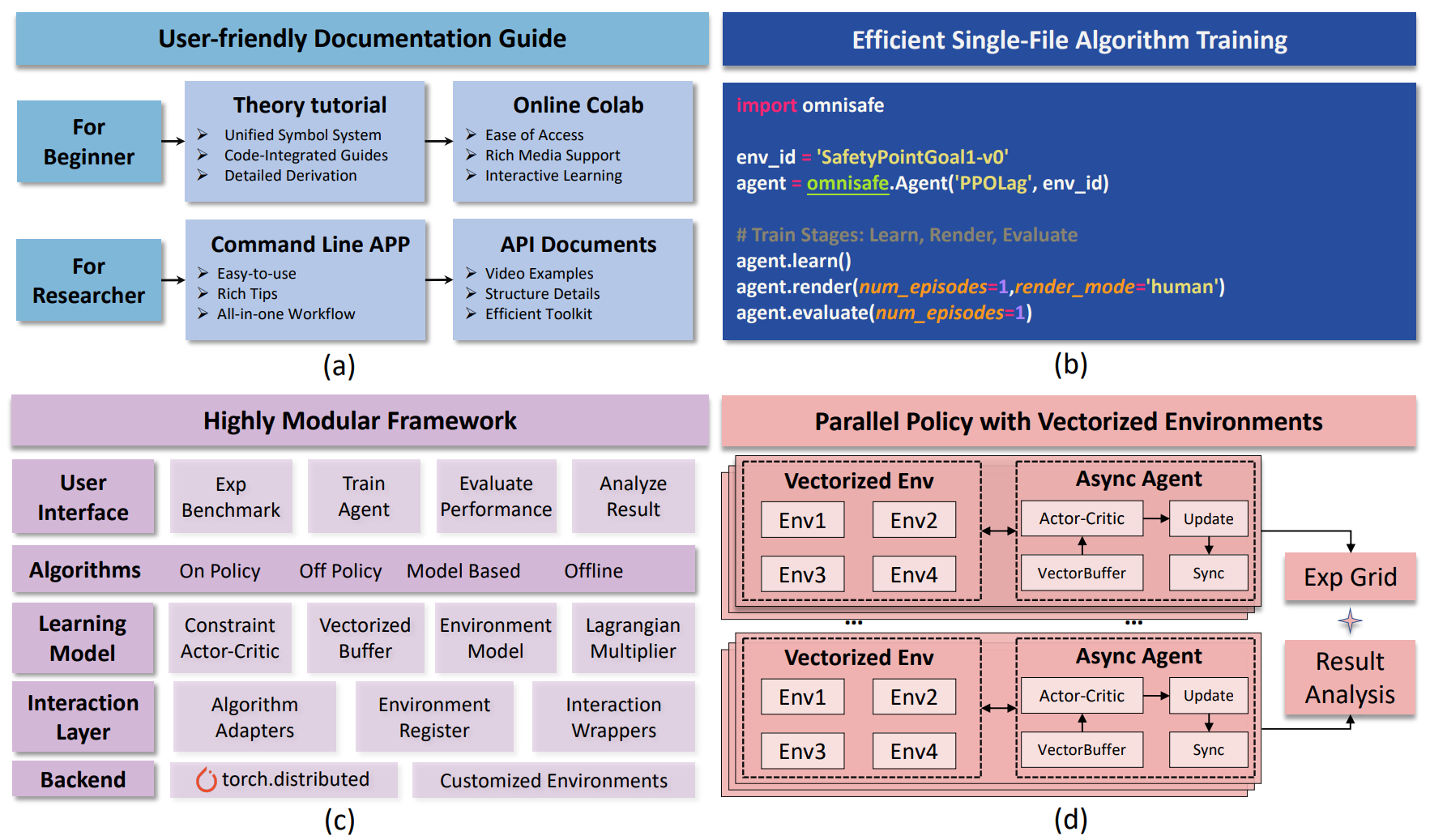

arXiv / Code / Documentation / Community JMLR 2024 (MLOSS: top 15 ~ 20 papers for open-source AI systems per year.) OmniSafe is an infrastructural framework designed to accelerate safe reinforcement learning (RL) research. |

|

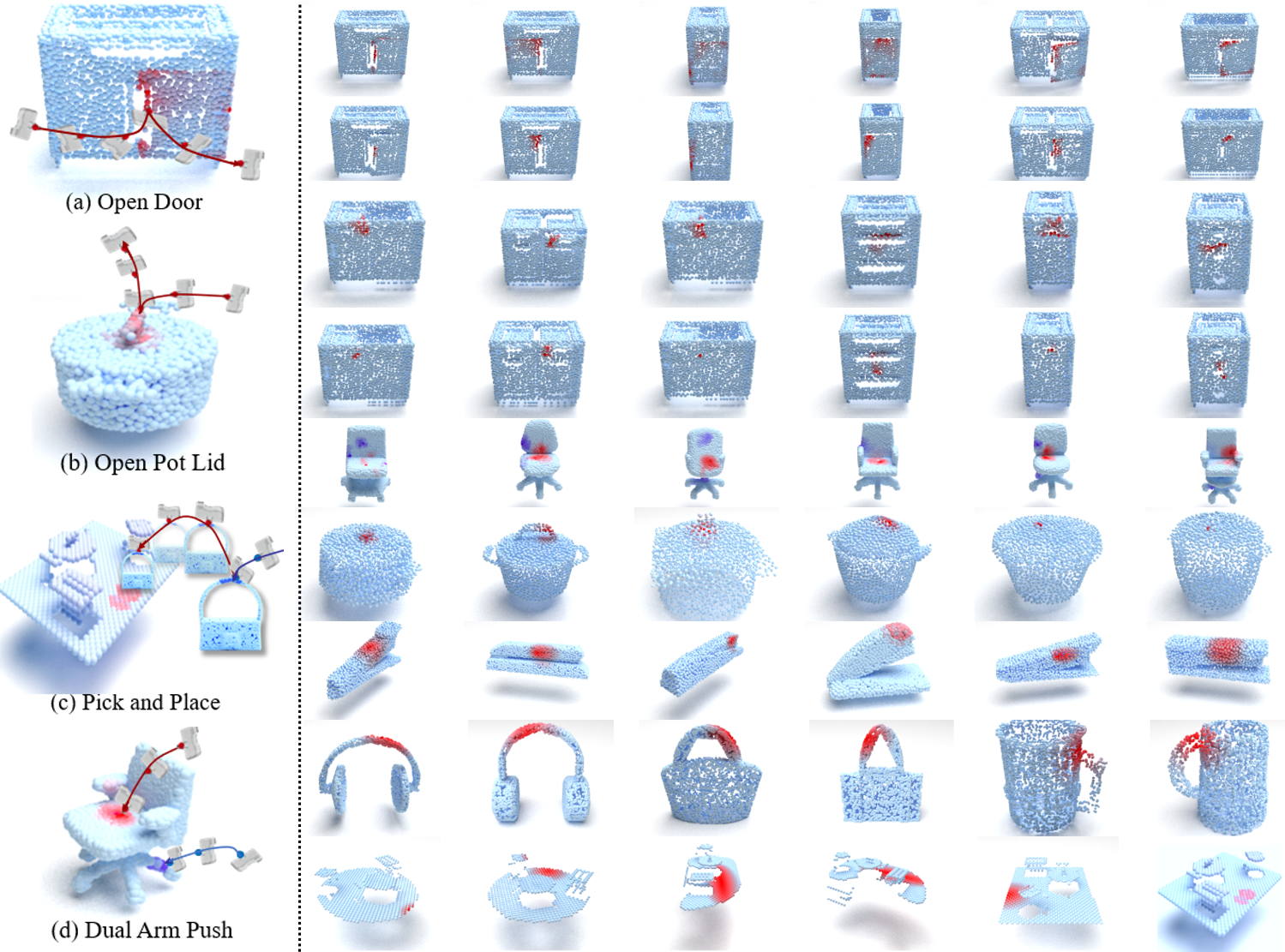

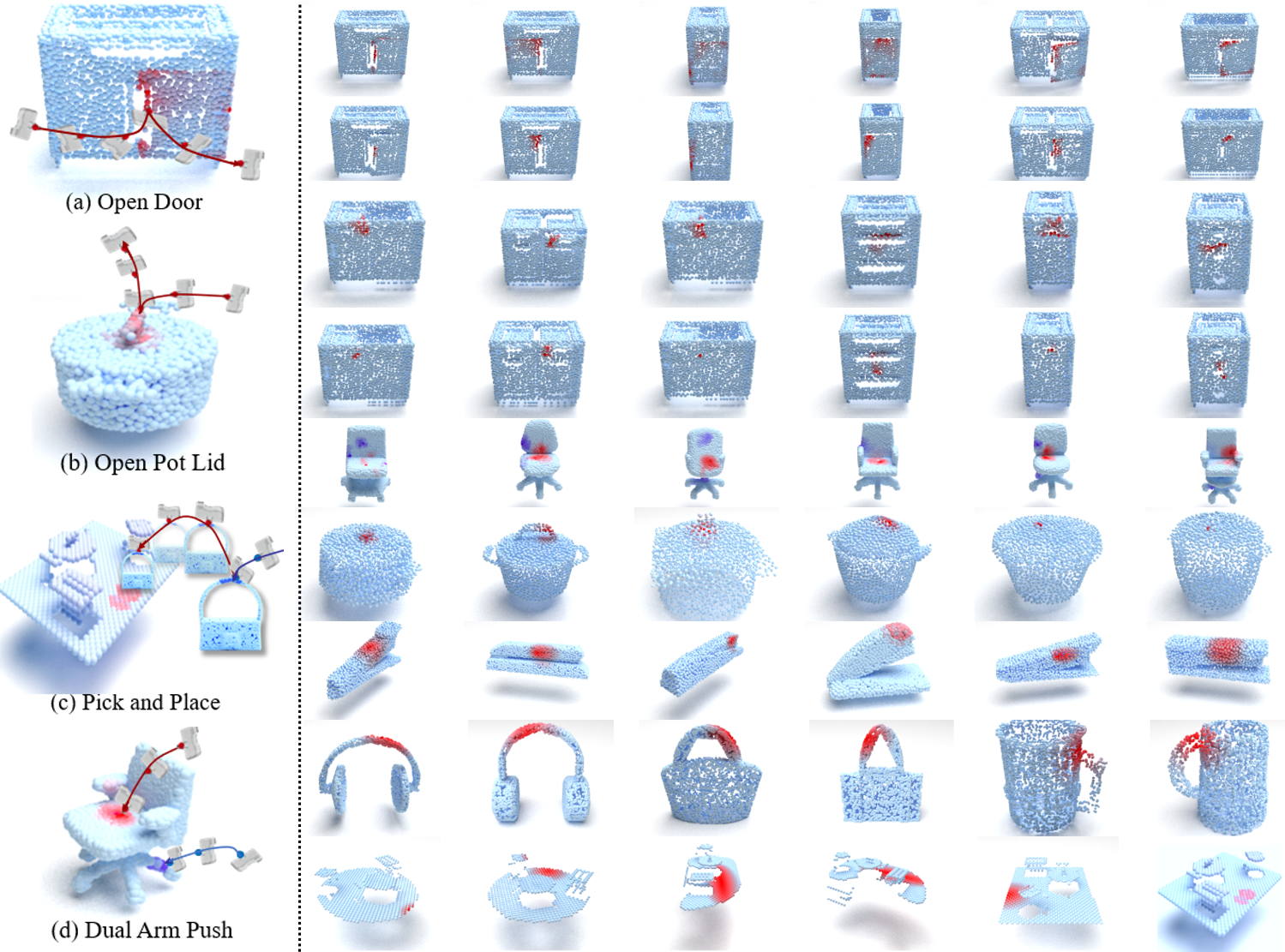

RLAfford: End-to-End Affordance Learning for Robotic Manipulation arXiv / Project Page / Video / Code / Code (Renderer) / Dataset / Media (CFCS) / Media (PKU) ICRA 2023 In this study, we take advantage of visual affordance by using the contact information generated during the RL training process to predict contact maps of interest. |

|

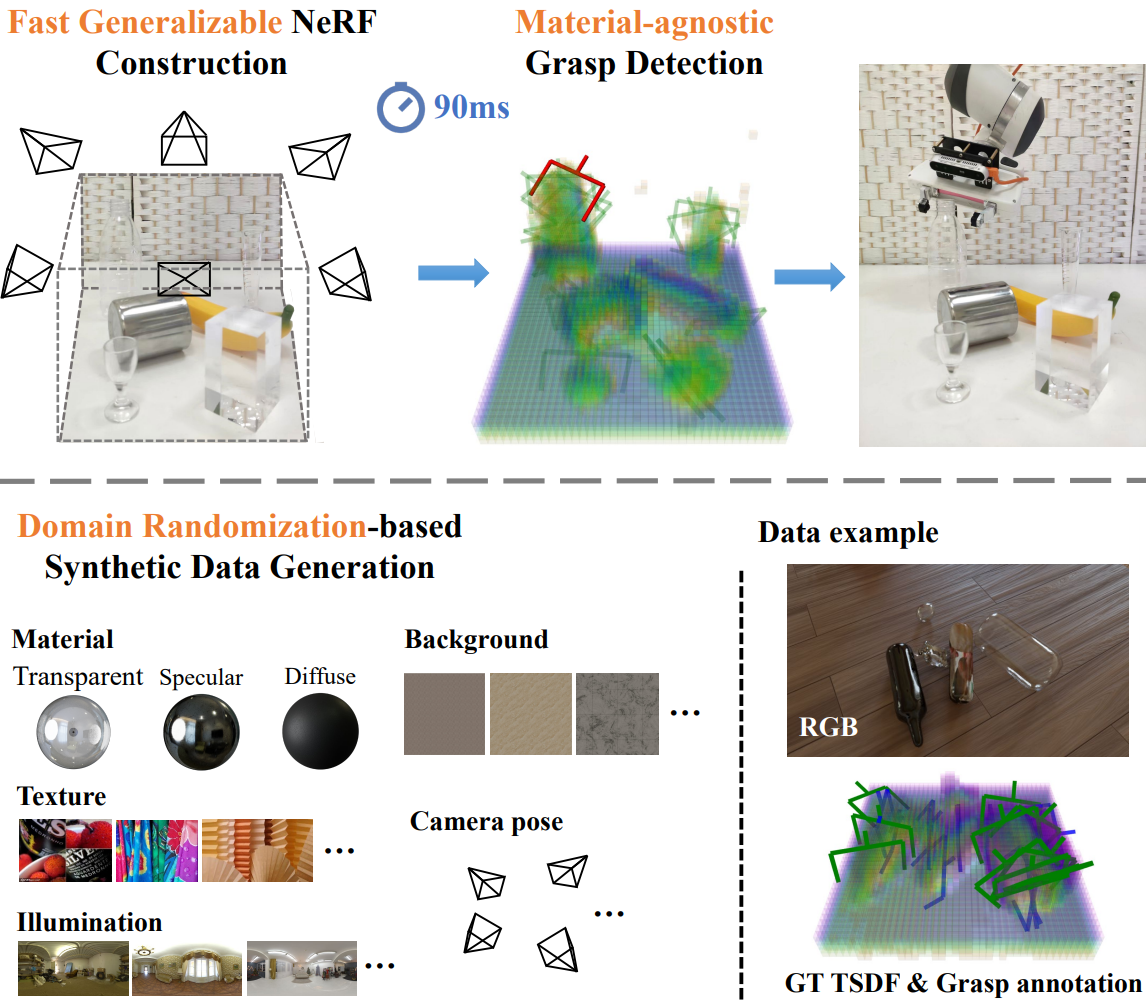

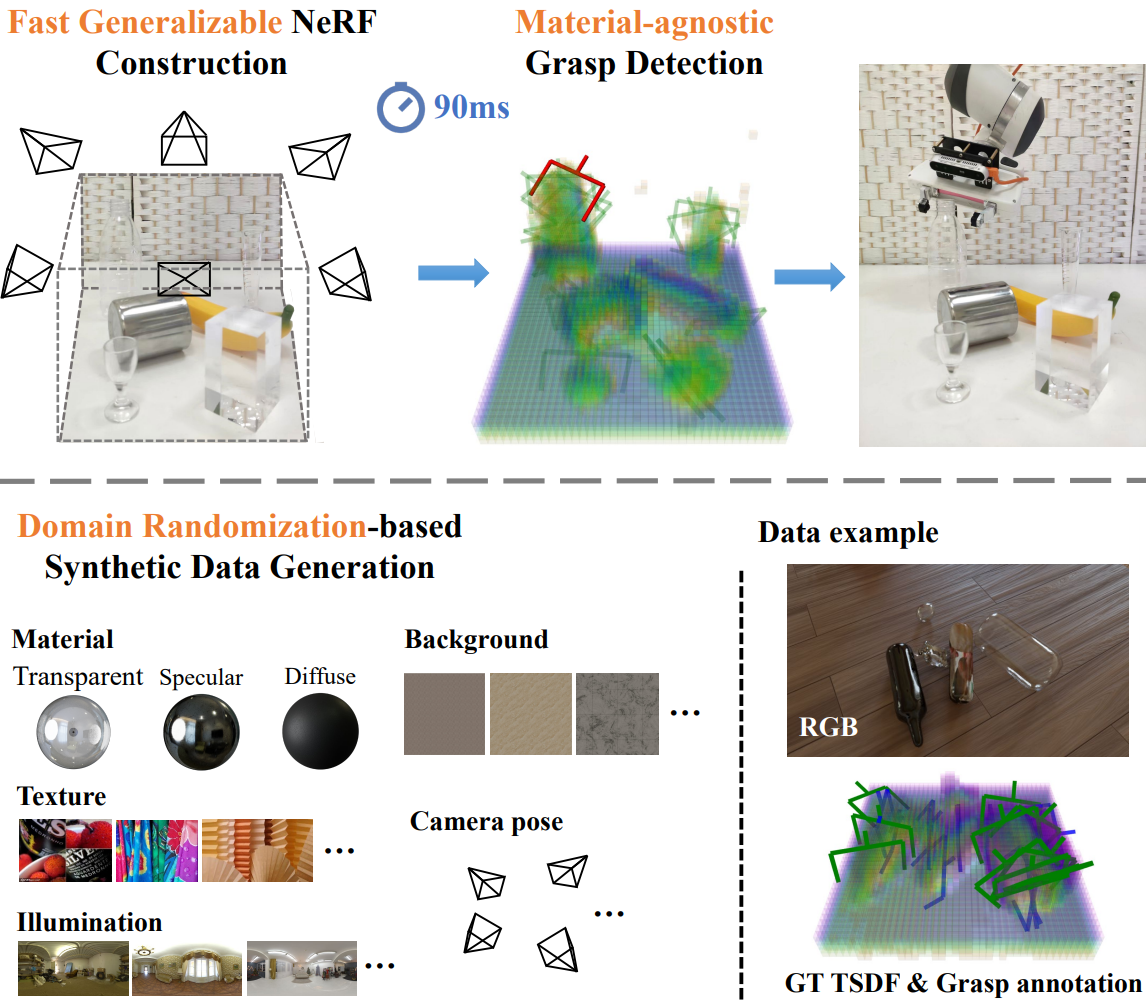

GraspNeRF: Multiview-based 6-DoF Grasp Detection for Transparent and Specular Objects Using Generalizable NeRF arXiv / Project Page / Code ICRA 2023 We propose a multiview RGB-based 6-DoF grasp detection network, GraspNeRF, that leverages the generalizable neural radiance field (NeRF) to achieve material-agnostic object grasping in clutter. |

|

arXiv / Project Page / Code / Dataset / Video / Media ICRA 2023 This paper introduces GenDexGrasp, a versatile dexterous grasping method that can generalize to unseen hands. |

|

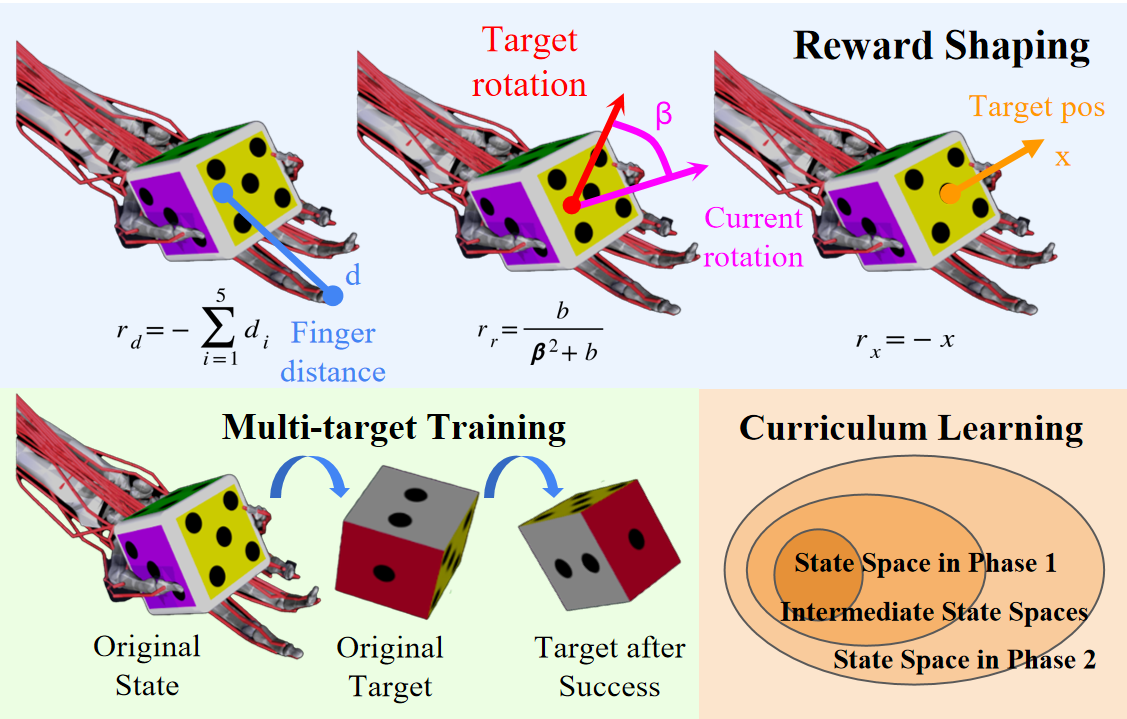

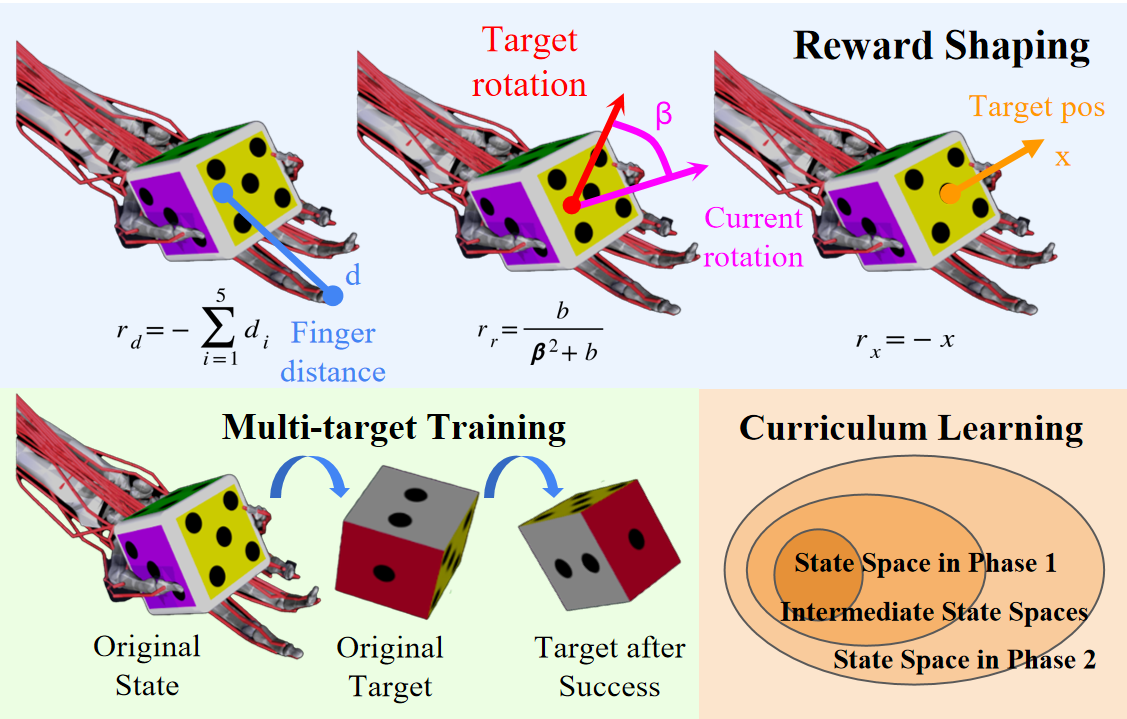

Paper / Challenge Page / Code / Slides / Talk / Award / Media (BIGAI) / Media (CFCS) / Media (PKU-EECS) / Media (PKU-IAI) / Media (PKU) / Media (China Youth Daily) First Place in NeurIPS 2022 Challenge Track (1st of 340 submissions from 40 teams) Reconfiguring a die to match desired goal orientations. This task requires delicate coordination of various muscles to manipulate the die without dropping it. |

|

Selected as the Outstanding Thesis of the National Physics Forum 2018 During my high school years, I was selected for the Ministry of Education Talent Program, conducted physics research at Nankai University, and presented my thesis at the National Physics Forum 2018. |

|

Massachusetts Institute of Technology (MIT)

2023.01 - 2023.10 Visiting Researcher Research Advisors: Prof. Josh Tenenbaum and Prof. Chuang Gan |

|

Beijing Institute for General Artificial Intelligence (BIGAI)

2022.05 - 2023.06 Research Intern Research Advisor: Prof. Yaodong Yang Academic Advisor: Prof. Song-Chun Zhu |

|

Turing Class, Peking University (PKU)

2020.09 - 2024.06 Undergraduate Student Research Advisor: Prof. Hao Dong Academic Advisor: Prof. Zhenjiang Hu |

|

|

|

|

|

This template is a modification of Jiayi Ni's website and Jon Barron's website. |